by ThinkCode | Nov 30, 2015 | Search Engine Optimization

It was officially launched in July and has been rolling out quite slowly (per Google). We all know an article came out on the 22nd of October, describing a possible change in the algorithm. Is anyone else seeing this decline, if so, how are you going about fixing it? Content?

Google Panda is being rolled out slowly. If you are seeing a slow decline, use good industry tools, such as BrightEdge’s ‘Data Cube’, Linkdex, or Searchmetrics’ ‘Visibility’ section, to see where your content is weakest. Then review and enhance it: check the quality and uniqueness of your top category and product copy, review duplicate content, and take action. You can use canonicals (telling the engine that page-a is the same as page-b), and if you are really stuck and have a huge website you can consider “noindex”. The reality, though, is that you should not have any pages so poor that you need to hide them from an engine, so focus on quality content. Remember, you will have to wait for the next Google Panda update to even be considered for a recovery. It’s unlikely you have been hit by a manual penalty, as your decline would be more prominent.
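As a rough illustration of the duplicate-content review, the sketch below groups pages whose body copy is identical after normalizing case and whitespace, and builds the canonical tag you might place on the duplicates. The function names and sample URLs are hypothetical, and exact-match hashing is only an assumption for the example; it will not catch near-duplicates.

```python
import hashlib
import re
from collections import defaultdict
from html import escape


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicates(pages):
    """Group URLs whose normalized body copy is identical.

    `pages` maps a URL to its extracted body text (hypothetical input).
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.sha256(normalize(body).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def canonical_tag(url):
    """Build the <link> element for the <head> of each duplicate page."""
    return f'<link rel="canonical" href="{escape(url)}">'


pages = {
    "/page-a": "Red running shoes for   men.",
    "/page-b": "red running shoes for men.",
    "/page-c": "Blue hiking boots for women.",
}
for group in find_duplicates(pages):
    preferred = group[0]  # assumption: the first URL is the preferred version
    print(group, "->", canonical_tag(preferred))
```

Which URL in a group should be the canonical one is an editorial decision; picking the first is just a placeholder.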

Regarding RankBrain, don’t worry about it for rankings. It is in essence an add-on to index stemming, a sort of dictionary 2.0 if you like, that enhances synonym understanding through artificial intelligence. It uses the theory behind ‘connectivity queries’ (the others being ‘informational’, ‘navigational’ and ‘transactional’) and only helps engines understand the search query and, as a result, which type of SERP you should be shown. Later it might analyze content much better, but that would involve rewriting Googlebot, so don’t worry just yet.

In our view, only a few sites were affected by this update, as there have not been big reactions to it. Since the algorithm is about content, the content is what you need to work on.

by ThinkCode | Nov 24, 2015 | Search Engine Optimization

Any link building business model where a client simply pays a company to build links to an existing site is destined to fail. Eventually the activities of that link building company will be detrimental to the client site.

The only viable long-term link building strategy is an extreme focus by the client on generating truly great content, tools, and resources on their site: things their targeted audience would find very useful, informative, and interesting, and that cannot be found on hundreds of other sites on the web. This will garner great natural links from authoritative, relevant sites. Link building is dead. You have to figure out how to “earn” links, and earning links MUST start with great content.

The days of a business building a web site and then outsourcing link building to some 3rd party are over (or at a minimum, doing so is riskier than it has ever been). Businesses who do so are spending money to destroy their site. That money should instead be used for their marketing folks to generate great content, tools, and/or resources that will “earn” those great links.

Buying links is bad; don’t do it. Good content is definitely the way to go, as is outreach. Find influencers in your vertical and get in front of them if you can. If you have good content, you may get some shares or links that way.

Other ideas are to have an active social profile so you can push your content to go viral in hopes it will get picked up by quality sites.

Link building (especially when outsourced) has, in my mind, always referred to situations where a company that has already built out a site pays someone to build links to that existing site or to specific URLs on it. The link builders are essentially handed a finished product and told to “build links” to it.

If that finished product (web site) does not have truly great content then the link builder will have been given an almost impossible task. This is why most end up building very low quality, unnatural links. They have no other option. What authoritative, relevant web site is going to want to link to some low quality, even mediocre content? None. So link builders are forced to “plant” links on other sites through some unnatural link building technique like blog commenting, article submission, directory submission, forum spamming or signature links, etc.

We think “link earning” is something quite different than the “build a web site and throw it over the wall to the link builders to promote” approach. With link earning, those responsible for getting links must broaden their involvement in the overall process of building out a site. They need to provide input to the content team with ideas of the types of things they might produce that would be link worthy.

The old paradigm for building a site (or its content) and getting it to rank by acquiring links must essentially be reversed. Rather than having one team building out content and another building links, when earning links the content team and the link building/SEO team should work together as one, along the following lines:

* Figure out who your site’s targeted audience is

* Figure out which authoritative sites are frequented by large numbers of your targeted audience (these sites will almost always be very relevant to the site seeking to acquire links)

* Figure out what content, tools, and/or resources you can build on your site that (a) would be very useful to your targeted audience, (b) would be found by those related authoritative web sites to be something their visitors would be interested in, and (c) cannot be found on literally hundreds or thousands of other web sites on the Internet.

Once you have ideas for great content, tools, and/or resources that would attract (“earn”) natural links from those relevant, authoritative sites THEN and only then would the content team get to work building it. The content team should be told not to cut corners because even this approach only works if what is produced is truly great.

Once the content has been generated, acquiring natural links from those authoritative, relevant sites where your targeted audience can be found in large numbers is MUCH easier. It typically only requires that you contact the webmaster or the person in charge of content on the site.

We get a 10-15% success rate with this by simply emailing those decision makers to tell them about the new content.

by ThinkCode | Nov 18, 2015 | Search Engine Optimization

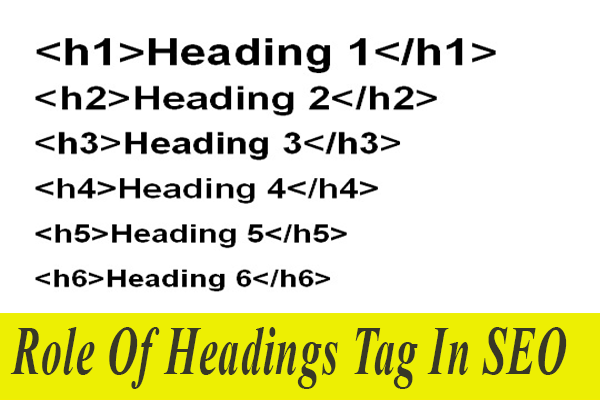

Combining great content with “proper” headings can definitely help rankings. It is rare that anyone would ever need H4, H5, or H6 unless they are marking up a very long piece of content. In most cases, a single H1 and possibly a handful of H2s is all that is needed. Perhaps H3s for longer articles/posts.
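To check how a page actually uses its headings, a quick count per level is often enough. The following is a minimal sketch using Python's standard `html.parser`; the class and function names are made up for illustration, and it only counts opening tags rather than judging whether the outline is sensible.

```python
from collections import Counter
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCounter(HTMLParser):
    """Counts how many times each heading level opens in a document."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names before calling this method.
        if tag in HEADING_TAGS:
            self.counts[tag] += 1


def heading_counts(html):
    """Return a dict such as {'h1': 1, 'h2': 3} for the given HTML string."""
    parser = HeadingCounter()
    parser.feed(html)
    parser.close()
    return dict(parser.counts)


sample = "<h1>Guide</h1><h2>Setup</h2><p>text</p><h2>Usage</h2>"
print(heading_counts(sample))
```

A page reporting more than one `h1`, or many `h4` to `h6` elements on short content, is a hint that headings are being used for styling.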

Many sites use heading elements for styling rather than to semantically mark up the content. This has been done since the beginning of time. Google is aware of this, and they typically shy away from any “signal” that is noisy (which this one definitely would be). Demoting sites because they are using standard HTML elements in their markup seems ludicrous to me. Like many around the web, that SEM agency might be seeing a correlation between the use of H4-H6 and poor rankings, but we seriously doubt that using H4, H5, and H6 is actually a “cause” of poor rankings.

While less important than good meta titles and descriptions, H1 headings may still help define the topic of a page to search engines.

We always prefer to use H1 to H3, as this gives good results. Still, H4 to H6 can be used for third-priority keywords.

by ThinkCode | Nov 2, 2015 | Search Engine Optimization

If your URLs are pretty much all indexed both with HTTP and HTTPS, we would seriously consider just making the entire site HTTPS, since you’re already going to be redirecting essentially half of your indexed URLs. HTTPS is a positive ranking factor now, and Searchmetrics has seen a significant lift on sites that converted to all HTTPS.

But ONLY do this if you can do it correctly, which means modifying the URLs of object references like images, CSS, JS files, etc. so that they too use HTTPS. Otherwise, your site will be throwing up warnings to your visitors that the pages contain insecure content.
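One way to find the references that still need updating is to scan each page for resources loaded over plain HTTP. The sketch below uses Python's standard `html.parser`; the class name and the list of tags checked are assumptions for illustration and are not exhaustive (for example, it ignores URLs inside CSS files and inline styles).

```python
from html.parser import HTMLParser

# Tags whose `src` loads a resource into the page (assumed, not exhaustive).
SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source", "embed"}


class InsecureResourceFinder(HTMLParser):
    """Collects (tag, url) pairs for resources referenced over http://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        url = None
        if tag == "link":
            # Only stylesheets are loaded; a rel="canonical" link is not.
            rel = (attr_map.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                url = attr_map.get("href")
        elif tag in SRC_TAGS:
            url = attr_map.get("src")
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_insecure_resources(html):
    finder = InsecureResourceFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


page = (
    '<link rel="stylesheet" href="http://example.com/a.css">'
    '<img src="https://example.com/b.png">'
    '<script src="http://example.com/c.js"></script>'
    '<a href="http://example.com/">plain links are not mixed content</a>'
)
print(find_insecure_resources(page))
```

Ordinary `<a href>` links are deliberately skipped, since linking to an HTTP page does not trigger a browser warning.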

We would transfer the value of the HTTP pages over to their corresponding HTTPS pages. Any slight loss of PageRank/link juice should be offset by the gains resulting from going HTTPS. Once this is done, however, all new links would be pointing directly to the HTTPS version of the site’s URLs.

A 301 is assumed to pass about 95% of the link equity from backlinks. The only thing better is to do nothing at all, but given the duplicate content implications, the over-indexation, and the confusion from Google attempting to index both URLs, this is still a good move.
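The redirect rule itself is simple: each HTTP URL maps to the same host, path, and query string on HTTPS, answered with a 301 status. Here is a minimal sketch of that mapping using `urllib.parse`; the function name is hypothetical, and in practice the rule would normally live in your web server or CDN configuration rather than in application code.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url):
    """Return (301, target) for an http:// URL, or None if no redirect applies.

    Host, path, query string, and fragment are kept unchanged so that every
    HTTP page maps one-to-one onto its HTTPS counterpart.
    """
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    target = urlunsplit(
        ("https", parts.netloc, parts.path, parts.query, parts.fragment)
    )
    return 301, target


print(https_redirect("http://example.com/shop?id=1"))
print(https_redirect("https://example.com/shop?id=1"))
```

Redirecting page-to-page like this, instead of sending everything to the home page, is what lets the value of each HTTP URL carry over to its HTTPS equivalent.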

But the purpose and functionality of a 301 redirect and a canonical tag are different. In this case, a 301 says that the HTTPS page was permanently moved to HTTP; in essence the two pages are merged into one. Visitors to HTTPS are sent directly to the HTTP page. Conversely, the canonical is the same as saying “I have two identical pages, I’m aware of that, but I only want Google to pay attention to one of them”. Both pages still respond, but the proper canonical tag is your indicator to Google that you know the HTTPS and HTTP pages are the same and that only the HTTP page should be indexed.

The other big difference between the two is how Googlebot crawls. Once it detects a URL with a 301, it will begin to stop crawling that URL. It may revisit the URL for a while to ensure that you didn’t add the 301 by accident, but over time it will respect the 301 and not crawl that URL anymore. If your site is large, this is a good thing: it forces Google to crawl the pages you care about, since Googlebot only crawls so many pages each time it visits. By ensuring it is crawling the pages you care about, it becomes more efficient, notices your site changes more quickly, and gets quicker and more accurate in how it indexes your content. Conversely, with a canonical tag there is a far greater chance that it will crawl both the HTTPS and HTTP pages, as they are all “valid” pages. Once it sees the canonical tag on the HTTPS pages, however, it will stop loading that page and move to the HTTP version (per the canonical tag). Because of this, it has a greater number of pages to crawl and is thus technically less efficient. If your site isn’t very large, that’s not a big concern; if you have a large site, it is something to consider. You can always get a good feel for how much of your site is being crawled by looking at the crawl stats in Google Search Console.

In summary, both solutions will solve your issue – but depending on if you are utilizing a proper SSL and thus need HTTPS to respond with the secure encrypted response internet users are starting to expect

by ThinkCode | Oct 26, 2015 | Search Engine Optimization

It’s completely normal to have links from external websites that are not related to your industry, and no action is required. You have to ask yourself, why would Google penalize you for this? The only danger is buying links, or getting links in large quantities from link farms, low quality spammy websites, or low quality foreign language websites with spammy anchor text, etc.

Google Webmaster Tools shows a warning and a message in the Manual Actions section. Based on the manual action, we can determine which type of penalty the website has. If your website has a Penguin penalty, we have to find and filter out its spam backlinks, working through all of the links to your site that are listed in Google Webmaster Tools.

If there are hundreds or thousands of them with identical anchor texts, you should use Google’s disavow links tool.
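The disavow tool takes a plain text file with one entry per line: either a full URL or a `domain:` entry covering a whole site, with `#` marking comment lines. As a rough sketch, the function below turns a list of spam backlink URLs into domain-level entries. The function name and sample URLs are hypothetical, and disavowing whole domains rather than individual URLs is an assumption made for the example.

```python
from urllib.parse import urlsplit


def build_disavow(spam_urls, note="Spam links identified in backlink audit"):
    """Return the text of a disavow file with one domain: line per host."""
    hosts = set()
    for url in spam_urls:
        host = urlsplit(url).hostname  # lowercased by urlsplit; None if absent
        if host:
            hosts.add(host)
    lines = [f"# {note}"] + [f"domain:{host}" for host in sorted(hosts)]
    return "\n".join(lines) + "\n"


spam = [
    "http://Spam.example/cheap-links.html",
    "http://spam.example/more-links.html",
    "https://links.example/directory?page=4",
]
print(build_disavow(spam), end="")
```

Collapsing to domains keeps the file short when one site links to you from hundreds of pages, but it also disavows any good links from that domain, so review the list before uploading it.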

Keep the following points in mind when filtering spam links:

* Irrelevant links

* Links from penalized websites

* Links from porn and other spam websites

* Links from paid sources

There are 3 great tools for assessing the quality of your backlinks.

Majestic.com will give you a list of most backlinks and an insight into their quality. You can export their lists into LinkDetox and their reports will tell you about sites that are outside the Google index, part of link rings, from suspicious neighbourhoods etc.

LinkDetox will help you create a disavow file for Google. It will also help you analyze the risk of new potential links.

Rmoov (www.rmoov.com): If you need to do some clean-up work, Rmoov has a great tool suite for sending emails to site owners. It’s the only way to stay sane if a client needs a big backlink clean-up job.

We regularly run this process for a client who used to have around 50K backlinks and LinkDetox reported 90% were suspicious!

by ThinkCode | Oct 14, 2015 | Search Engine Optimization

Large sitemap.xml files should always be generated automatically, typically by the CMS or ecommerce system on the backend. There are usually plugins or modules available that will do it, or you can write your own. We would HIGHLY recommend utilizing the <priority> element in the sitemap.xml to prioritize your pages so the engines know which pages are most important to be indexed, second most important to be indexed, third most important to be indexed, etc.

While we agree that sitemap.xml files are typically not needed by most sites, they are indeed very helpful for most large sites. Ecommerce sites, for instance, may have hundreds of thousands of products. Those products might be linked to primarily from category pages DEEP in the site (could be 5, 6, 7, etc. clicks from the home page).

Each site has a crawl budget (the amount of time and resources that the engine is going to dedicate to finding pages on your site). The engines are typically also going to index only a certain number of pages on your site. Both of these are based primarily on the number and quality of backlinks the site has (i.e. PageRank).

So anything you can do to help the engines like Google crawl your site more efficiently (spend your crawl budget crawling pages you think are most important like product pages on ecommerce sites) and to influence which pages get indexed before others (using <priority> in a sitemap.xml) is advantageous for large sites EVEN if the site has great navigation.

Google has several limits on sitemaps. A sitemap file can contain at most 50,000 URLs, and a sitemap index file can contain at most 50,000 sitemap.xml files. We believe the size of a sitemap.xml cannot exceed 50MB uncompressed and possibly 10MB compressed.
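For a large catalog, the 50,000-URL limit means splitting the URL list across several sitemap files and listing those files in a sitemap index. The following is a minimal sketch of that split; the function names, file naming scheme, and example.com URLs are assumptions for illustration, and it does not check the file size limit.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into lists of at most `size` entries each."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def sitemap_index(sitemap_urls):
    """Build a sitemap index document listing the given sitemap file URLs."""
    entries = "".join(
        f"  <sitemap><loc>{escape(url)}</loc></sitemap>\n" for url in sitemap_urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}"
        "</sitemapindex>\n"
    )


product_urls = [f"https://example.com/product/{i}" for i in range(120_001)]
chunks = chunk_urls(product_urls)
# Hypothetical naming scheme: sitemap-1.xml, sitemap-2.xml, ...
files = [f"https://example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
print([len(chunk) for chunk in chunks])
print(sitemap_index(files))
```

Each chunk would then be written out as its own sitemap file, and only the index URL needs to be submitted to the engines.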

Here is how we configure automatic XML sitemap generation:

Within Categories Options, set Frequency to Daily and Priority to 1.

Within Products Options, set Frequency to Daily and Priority to 0.8 (or anything less than 1 and more than we are about to set the CMS pages to).

Within CMS Pages Options, set Frequency to Weekly and Priority to 0.25.

Within Generation Settings, set Enabled to Yes, Start Time to 01 00 00 (01:00 a.m., or another time when your traffic is at its lowest), and Frequency to Daily, and enter your e-mail address into the Error Email Recipient field.
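If you write your own generator instead of using a built-in module, the settings above translate into `<changefreq>` and `<priority>` values on each `<url>` entry. The sketch below shows roughly what that output looks like; the `SETTINGS` table mirrors the values listed above, while the function names, page-type labels, and sample URLs are hypothetical.

```python
from xml.sax.saxutils import escape

# (changefreq, priority) per page type, matching the settings described above.
SETTINGS = {
    "category": ("daily", "1.0"),
    "product": ("daily", "0.8"),
    "cms": ("weekly", "0.25"),
}


def url_entry(loc, page_type):
    """Build one <url> element for the given location and page type."""
    changefreq, priority = SETTINGS[page_type]
    return (
        "  <url>\n"
        f"    <loc>{escape(loc)}</loc>\n"
        f"    <changefreq>{changefreq}</changefreq>\n"
        f"    <priority>{priority}</priority>\n"
        "  </url>\n"
    )


def build_sitemap(pages):
    """Build a sitemap document from (loc, page_type) pairs."""
    body = "".join(url_entry(loc, page_type) for loc, page_type in pages)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{body}"
        "</urlset>\n"
    )


pages = [
    ("https://example.com/shoes", "category"),
    ("https://example.com/shoes/red-runner?size=9&color=red", "product"),
    ("https://example.com/about-us", "cms"),
]
print(build_sitemap(pages))
```

Note that `&` in a URL has to be escaped as `&amp;` inside the XML, which `escape` handles here.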