by ThinkCode | Oct 6, 2015 | Search Engine Optimization

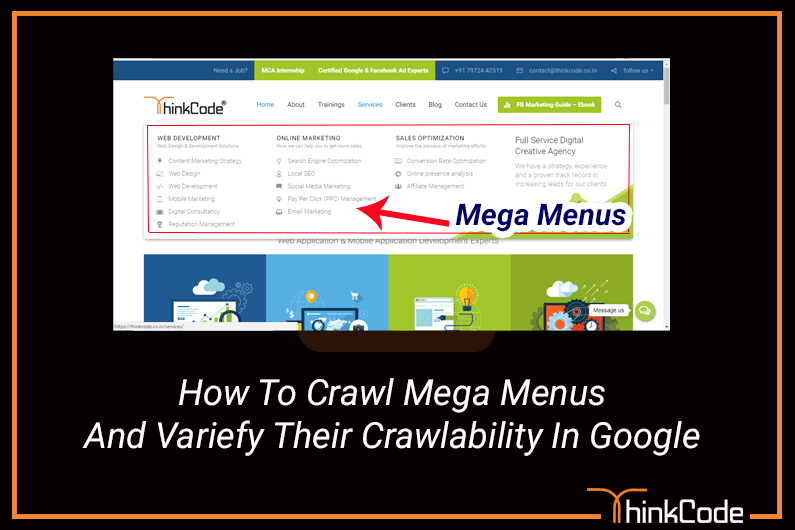

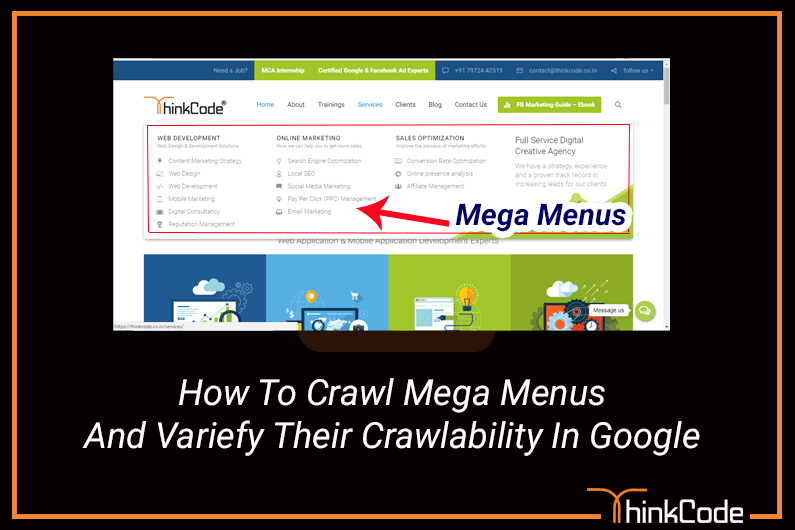

Google's crawlers were designed from the start to understand simple HTML. If your mega menu is built with complex code (AJAX or something similar), it may be ignored, and it may not show up even when you test the page with the Fetch tool. Google favors simple HTML because it wants the things on your page to stay simple for users. Complex code could be the culprit in that case, but it would not cause problems for your overall SEO efforts or your rankings.

Google should have no problem crawling a mega menu, assuming it is implemented with nested unordered lists (<ul> elements) containing anchor (<a>) elements in the various list items (<li>). If you're doing something unusual, such as using complex AJAX calls to build the menu, that might be an issue.
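To illustrate why a plain HTML menu is easy to crawl, here is a minimal Python sketch, using only the standard library's `html.parser`, of how a simple crawler can pull every link out of a nested `<ul>`/`<li>`/`<a>` mega menu without running any JavaScript. The menu markup, the `loadMegaMenu` script and all URLs are hypothetical, and this parser does not render JavaScript the way Googlebot can; it only shows what a non-rendering crawler would see.

```python
from html.parser import HTMLParser

# Hypothetical mega menu built with nested <ul> lists and <a> links in each <li>.
MENU_HTML = """
<nav>
  <ul>
    <li><a href="/services/">Services</a>
      <ul>
        <li><a href="/services/seo/">SEO</a></li>
        <li><a href="/services/ppc/">PPC</a></li>
      </ul>
    </li>
    <li><a href="/blog/">Blog</a></li>
  </ul>
</nav>
"""

# Hypothetical menu that only exists after a script fetches it via AJAX.
AJAX_MENU_HTML = '<div id="mega-menu"></div><script>loadMegaMenu("/api/menu.json");</script>'


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, the way a simple crawler discovers URLs."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Return the links found in the raw HTML, without executing any scripts."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


if __name__ == "__main__":
    print("HTML menu links:", extract_links(MENU_HTML))
    print("AJAX menu links:", extract_links(AJAX_MENU_HTML))  # nothing to discover
```

The HTML menu exposes all four URLs in the raw markup, while the AJAX version exposes none until a crawler executes the script.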

As far as we know, the Fetch & Render tool in Search Console emulates what Google now does during indexing to determine which content is visible to users on initial page load. But Google also knows which parts of the page are template (header, footer, left nav/sidebar if applicable, right sidebar if applicable, breadcrumbs) and which part is the main body/content section. Google fully expects that the top navigation might use dropdowns and that those dropdowns will be hidden on initial page load. Just because they do not show in Fetch & Render does not mean they are not being crawled for discovery and counted.

If the pages linked from the mega menu have been indexed and can be found in the SERPs for the keyword phrases they target, then the fact that Fetch & Render doesn't display them (within a menu) is not an immediate cause for concern.

Apart from this, make your menus logical and hierarchical if there are a lot of categories: users should be able to grasp the structure of the site from the way the menu is structured. They'll feel more comfortable and stay on the site longer.

Google has been scanning JavaScript for URLs to discover for over a decade. In the last half decade or so, however, it has become extremely good at actually executing JavaScript and techniques built on it, such as AJAX. Not all search engines are as adept at this, so if you want your navigation to be crawlable by every engine, we recommend implementing it in HTML: all search engines were designed to process HTML documents.

You can check your web server logs to see where Googlebot and other crawlers have been. Tools such as AWStats or Splunk can help with this.
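If you want a quick look before setting up AWStats or Splunk, a short script can tally which URLs crawlers requested. This is a minimal sketch that assumes your server writes the Apache/Nginx "combined" log format; the sample log lines, IP addresses and paths are hypothetical. It matches crawlers by User-Agent string only, which can be spoofed; Google documents verifying real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Regex for the Apache/Nginx "combined" log format (an assumption about your server config).
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

CRAWLERS = ("Googlebot", "bingbot")

# Hypothetical log lines: two Googlebot hits, one Bing hit and one human visitor.
SAMPLE_LOG = [
    '192.0.2.10 - - [06/Oct/2015:10:00:00 +0000] "GET /services/seo/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.20 - - [06/Oct/2015:10:05:00 +0000] "GET /blog/ HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.5 - - [06/Oct/2015:10:06:00 +0000] "GET /services/seo/ HTTP/1.1" 200 5120 '
    '"https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '192.0.2.10 - - [06/Oct/2015:11:00:00 +0000] "GET /services/seo/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]


def crawler_hits(lines, crawlers=CRAWLERS):
    """Count requested paths per crawler, based on the User-Agent string only."""
    hits = {name: Counter() for name in crawlers}
    for line in lines:
        match = COMBINED_LOG.match(line)
        if not match:
            continue  # skip lines in other formats
        agent = match.group("agent")
        for name in crawlers:
            if name in agent:
                hits[name][match.group("path")] += 1
    return hits


if __name__ == "__main__":
    for crawler, paths in crawler_hits(SAMPLE_LOG).items():
        print(crawler, paths.most_common())
```

On a real server you would pass the lines of your access log file instead of `SAMPLE_LOG`.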

by ThinkCode | Sep 24, 2015 | Search Engine Optimization

Social media marketing is now widely used to promote site content online. It is carried out on various platforms such as Google+, Twitter, Facebook, YouTube and LinkedIn.

There are many free social media marketing tools available on the internet, yet the difficulty is finding the ones that will serve your needs effectively.

Free Social Media Marketing Tools

This tool lets you access and manage your Facebook, Twitter and LinkedIn accounts from a single dashboard. From Buffer, you can also schedule when your posts are shared and handle the rest of your posting workflow.

This tool helps you manage your Twitter account and makes it easy to track your most active followers, answer questions sent by other Twitter users, and filter through direct messages or mentions that may need your attention.

This easy-to-use tool has a dashboard where you can view and manage your Twitter and Facebook accounts at the same time.

This tool helps you save web content, such as articles and images, for sharing on your social media accounts. Once you have installed Scoop.it, you can add content URLs for sharing. You can also link this tool with HootSuite.

Like Scoop.it, Storify lets you save online articles for future posting. When you find new content on the Internet, Storify lets you classify your finds by subject.

This tool is an RSS feed reader that regularly checks your favorite news sites and blogs for new content.

If you want to follow the latest trends and topics related to your business, similar products and competitors, Topsy is a useful application for that purpose. It helps you keep up with the most current news and events that could affect your brand image.

You can use these tools as often as you like. Choose the social media marketing tools that fit your business objectives and target audience.

by ThinkCode | Sep 21, 2015 | Search Engine Optimization

Google announced a new look and feel for the Google AdSense iOS and Android apps.

The new look follows Google’s Material Design and adds some new components.

Below are the new features of the Google AdSense apps:

New metrics: view impressions, impression RPM and CTR for your ads.

Support for Hindi and Malay: use the AdSense app in Hindi and Malay, which recently joined the AdSense family, as well as in 31 other languages.

New reports for Android: check the performance of different ad styles, ad networks, bid types and custom date ranges.

Today widget for iOS: check your earnings more quickly on your iPhone in the Today view.

by ThinkCode | Sep 11, 2015 | Search Engine Optimization

Basically, robots.txt is a line of communication between a website and search engines. It sets out the crawling guidelines for your site and presents them to web crawlers. In essence, it defines which parts of your website Google is allowed to crawl and which it should avoid.

It is important to remember, however, that while reputable search engines such as Google will recognize and respect the directives in the robots.txt file, many malicious or low-quality crawlers may ignore them completely.

If you’re wondering whether robots.txt is an absolute must, the answer is no. Your website will be indexed by the major search engines regardless of whether you have one.

Step-by-step instructions to create robots.txt for WordPress

Creating a robots.txt file is much simpler than you might think. You simply create a text file, name it robots.txt and upload it to your site’s root directory. Adding content to the file is not difficult either. These are the directives you use to write robots.txt:

User-Agent – specifies which search engine crawler the rules apply to

Disallow – tells the crawler not to crawl specific files or directories on the website

Allow – explicitly permits crawling of an item on the website (useful for exceptions inside a disallowed directory)

Sitemap – points the crawler to your sitemap

Crawl-delay – specifies a period of time between a search engine’s requests to your server (Google ignores this directive)

Note that if you want to apply the same rules to all search engines, put an asterisk (*) after the User-Agent directive.

For example:

```text
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Sitemap: https://thinkcode.co.in/sitemap.xml
```
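Before uploading the file, you can sanity-check the rules with Python’s standard-library `urllib.robotparser` module. This is a minimal sketch: the file paths tested are hypothetical, and Python’s parser applies rules in file order (first match wins) rather than Google’s most-specific-match logic, so treat it as a quick check, not a replica of Googlebot. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt. Python's parser treats a blank line as the end of a
# rule group, so the directives are kept on consecutive lines.
ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Sitemap: https://thinkcode.co.in/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # Hypothetical paths to test against the rules.
    for path in (
        "/wp-content/uploads/2015/09/logo.png",
        "/wp-content/plugins/some-plugin/script.js",
        "/blog/",
    ):
        url = "https://thinkcode.co.in" + path
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(f"{path} -> {verdict}")
    print("Sitemaps:", rp.site_maps())
```

Because the file only has a `*` group, any crawler name passed to `can_fetch` falls back to those rules.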

If you use the robots.txt file properly, it helps keep search engines away from content you don’t want crawled. Above all, remember that there is no general standard for the ideal robots.txt file; its content should depend on the type of website you have.

by ThinkCode | Sep 7, 2015 | Search Engine Optimization

In SEO, there simply are no shortcuts. The folks at Google have built an algorithm that is pretty darn difficult to trick, and when someone does trick it, they fix the loophole quickly. You might see better results from black hat tactics on some other sites; however, the yearly traffic those sites get does not match Google’s monthly traffic.

Black hat SEO is just plain stupid. If someone is willing to trash a site and start again, it can work. Many internet marketers do this and it does bring results, but a real business should never taint its brand with practices that can earn a Google slap. Unless you are willing to dump your brand, domain and everything else and start from scratch, you should never do it. But it can be just as silly to follow only Google’s webmaster guidelines. Watching black hat marketers can help you find valuable shortcuts for your clients. We use grey hat techniques all the time that bring great results, but you have to be smart about what you are doing and keep your client safe above all else.

With Google’s most recent algorithm updates, we are seeing that black hat tactics are essentially prehistoric and simply don’t work like they used to. For example, traditional black hat SEO is about loading your site with irrelevant but heavily searched terms, redirects and so on. Now, not only does on-site keyword focus have less of an impact (whether done correctly or not), but the algorithms are also all about building a network around relevance, incorporating both on-site and off-site signals. The idea is to establish industry leadership around your site, making your brand highly relevant within the industry. Traditional black hat tactics are detrimental to building this leadership and work against it in the long run. From what we can tell, this trend will only strengthen with further updates.

by ThinkCode | Sep 2, 2015 | Search Engine Optimization

There is no single “right” amount to spend on Google AdWords. It depends on many factors, such as the targeted keywords, audience, goals and the CPC of the keywords.

We recommend keeping in mind the value of a customer or potential client, and using that number as a target ad spend for the week or month.

For example, if a mold cleanup company is running AdWords and its average job is about $5,000, it could use that as a decent ballpark monthly budget. You can certainly break this down to a daily budget, but AdWords takes time to deliver results, so you may be frustrated at first. Also, keep in mind that the more competitive the keyword, the more you’ll have to pay for visibility. If the ROI isn’t there, you may need to rethink your strategy or ad platform (for example, in certain industries, paid social ads may yield better results).

The best thing to do is determine a budget, or at least an amount you are willing to spend. Estimating search volumes and rough costs (CPC) can be done, in part, with the AdWords Tools section. The AdWords tools will roughly tell you the expected range you can spend, but keep in mind that a tool is only as specific as the inputs you give it. Often the volumes and CPCs are only a loose indication of what should be spent, since they don’t always take your exact audience into account.

In the end, we advise our clients to determine a budget and let me build them a campaign that maximizes their spend. For instance, I manage a $50k monthly spend for one client but a $2,000 spend for another. The two have different audiences, but in each case I explain what good results look like and where we can potentially improve.

Having said all this, spending anything less than $50/day is more or less a waste of resources, even for a very regional company. With less than $50/day, an advertiser simply won’t get the visibility (impressions or CTR) it wants or expects, and that will sour the experience of both you and Google AdWords.
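The budgeting rules of thumb above come down to simple arithmetic. Here is a minimal Python sketch, assuming an average month of 365/12 (about 30.4) days and a hypothetical average CPC of $2.50; real AdWords costs vary by keyword, auction and audience, so treat the results as ballpark estimates only.

```python
import math

DAYS_PER_MONTH = 365 / 12  # about 30.4 days, an averaging assumption


def monthly_to_daily(monthly_budget):
    """Spread a monthly budget evenly across an average month."""
    return monthly_budget / DAYS_PER_MONTH


def daily_to_monthly(daily_budget):
    """Project a daily budget over an average month."""
    return daily_budget * DAYS_PER_MONTH


def estimated_clicks(budget, avg_cpc):
    """Whole clicks a budget can buy at a given average cost per click."""
    return math.floor(budget / avg_cpc)


if __name__ == "__main__":
    # Mold cleanup example: use the ~$5,000 average job value as the monthly budget.
    print(f"$5,000/month is about ${monthly_to_daily(5000):.2f}/day")

    # The suggested $50/day floor, projected over a month.
    print(f"$50/day is about ${daily_to_monthly(50):,.2f}/month")

    # Hypothetical average CPC of $2.50 (take the real figure from keyword research).
    print(f"At $2.50 per click, $50/day buys about {estimated_clicks(50, 2.50)} clicks/day")
```

With these assumptions, the $5,000 monthly budget works out to roughly $164 per day, and the $50/day floor to roughly $1,520 per month.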

At ThinkCode, if you are looking for the cheapest solution, we are not the right choice; but if you are looking for the best solution, one that delivers a return on investment, we would love to help you.